We all have a yearning for our environments to be more natural.

But since the rise of the world wide web, our lives have been increasingly spent staring at screens.

How can we continue our technological revolution, but do so in a way that supports our mental health and well-being?

That’s the exact question that’s fueling our search for more intuitive and immersive ways of engaging with technology.

This article explores the different ways people interact with computers and how those interactions have changed over time.

15 Definitions and Types of User Interface

Intelligent User Interfaces

Intelligent user interfaces use artificial intelligence technologies and machine learning to create personalized experiences for users. The technology is used to understand and interpret the user’s intentions and context before providing a tailored or guided experience.

Examples of intelligent user interfaces include chatbots, voice assistants, and recommendation systems. One of the more popular applications of this technology is the personalization engine, which is now embedded into many enterprise solutions.

Batch Interface

A batch interface takes a single command from the user and then processes a whole set of tasks without further user interaction. Batch interfaces are useful for processing large amounts of data and for completing many tasks that lend themselves well to analysis or automation.

Early computers ran on batch interfaces since programmers would feed the computer a set of instructions and then let the computer run to process the results. More modern examples would include auditing transactions in financial institutions or automated software testing.
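The idea of submitting work once and letting it run unattended can be sketched in a few lines of Python. This is only an illustration under stated assumptions: `run_batch`, the two audit functions, and the sample `TRANSACTIONS` are hypothetical names invented for this example, not part of any real auditing system.

```python
from typing import Callable


def run_batch(jobs: dict[str, Callable[[], object]]) -> dict[str, str]:
    """Run every job in order without prompting the user; return a report."""
    report: dict[str, str] = {}
    for name, job in jobs.items():
        try:
            job()
            report[name] = "ok"
        except Exception as exc:  # keep going so one failure does not stop the batch
            report[name] = f"failed: {exc}"
    return report


# Hypothetical transactions to audit, as (id, amount) pairs.
TRANSACTIONS = [("t1", 120.0), ("t2", -5.0), ("t3", 80.0)]


def audit_amounts() -> None:
    """Fail if any transaction has a negative amount."""
    bad = [tx_id for tx_id, amount in TRANSACTIONS if amount < 0]
    if bad:
        raise ValueError(f"negative amounts in {bad}")


def audit_unique_ids() -> None:
    """Fail if any transaction id appears more than once."""
    ids = [tx_id for tx_id, _ in TRANSACTIONS]
    if len(ids) != len(set(ids)):
        raise ValueError("duplicate transaction ids")


# One call starts the whole batch; the user only sees the final report.
report = run_batch({"amounts": audit_amounts, "unique_ids": audit_unique_ids})
```

The user issues one command and reads the report afterwards, which is the defining trait of a batch interface: there is no opportunity to step in while the jobs run.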

WIMP Interface

WIMP stands for ‘Windows, icons, menus, pointer’ and refers to a graphical user interface that uses these elements to allow the user to interact with the system. Laptops and desktop computers that use a mouse and keyboard are examples of WIMP interfaces.

Although it’s the most popular type of user interface, it is often considered clunky and inefficient because it isn’t an entirely ergonomic system. It remains the most versatile option available, but people are now exploring alternatives such as voice and tangible interfaces.

Tangible User Interface

A tangible user interface uses physical objects and touch as the primary means of interacting with the technology. Unlike the WIMP interface, which uses a mouse and an on-screen pointer, tangible interfaces have components that can be touched, moved, and manipulated to control the tech.

TUIs usually take the form of tabletop displays and interactive walls that use sensors to translate the user’s actions into commands. We see a lot of these interfaces in science fiction movies where characters control technology by moving objects on a table or interacting with holograms.

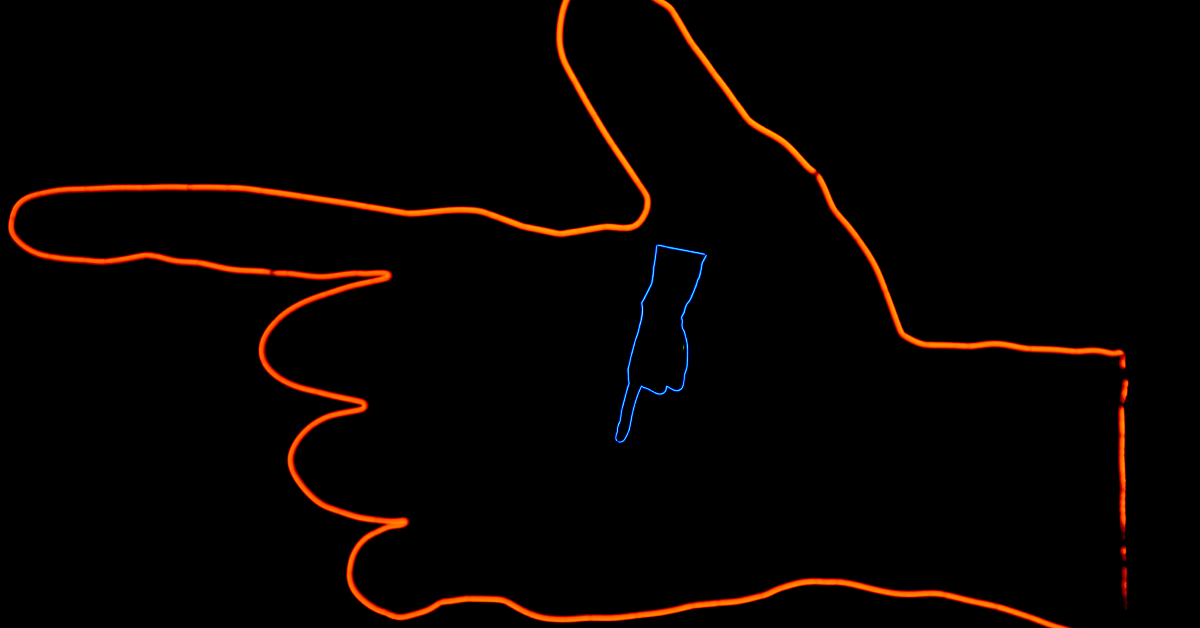

Kinetic User Interface

A kinetic user interface is another example of a tangible user interface that relies on gestures and motion-based commands. These interfaces use cameras and depth sensors to interpret movements and gestures to create immersive and natural user experiences.

KUIs are used in gaming, education, and art installations to allow users to control their environment simply by moving their bodies and speaking. They’re particularly useful for controlling virtual reality environments and for gesture-based control of robots.

Natural User Interface

Natural user interfaces combine a few of the previously mentioned technologies to create a seamless interface between humans and technology. The goal is to make it easier for people to interact with systems without the need for traditional inputs like screens, keyboards, and mice.

NUIs take many forms, including voice assistants and gesture-based interfaces, but they combine them in a way that can be invisible and completely natural for the user. They interpret spoken commands and use machine learning to understand the user’s context and requests.

Organic User Interface

An organic user interface aims to use inputs that are as close to living organisms and the real world as possible. OUIs mimic organic forms or systems like cells, organisms, and ecosystems, with design elements inspired by the forms and behaviors found in nature.

OUIs are most often used to create immersive and engaging experiences in art installations and interactive media. They’re a great educational tool and can be used to explain difficult scientific concepts and ideas through visuals and soundscapes.

Touchless User Interface

Many of the previously mentioned technologies would be considered touchless interfaces. This is a category of interface that allows users to interact with a system without physically touching a screen or input device. Instead, these interfaces use sensors to detect users’ gestures, movements, and commands.

These interfaces are being explored as ways to remove the barrier of physical inputs that make experiences in gaming and entertainment less immersive. The goal is to create a simpler and more intuitive way for people to interact with systems and technology.

Touch User Interface

A touch interface requires the user to interact with the technology through a touch screen or other touch-sensitive surface. Touch interfaces use sensors to detect the location, movements, and pressure of a user’s finger or pointing device on a screen and turn them into commands and actions.

They’ve become increasingly popular with the introduction of smartphones, tablets, and wearable devices. They’re an excellent way of allowing users to interact quickly with visual displays while they have the attention and focus to give to a screen.
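To make the step from sensor readings to commands more concrete, here is a minimal sketch that classifies a touch as a tap or a swipe from its start and end positions. The function name `classify_touch`, the `tap_radius` threshold, and the assumption that the y coordinate grows downward (as on most screens) are all choices made for this example; real touch frameworks also use timing and pressure.

```python
import math


def classify_touch(
    start: tuple[float, float],
    end: tuple[float, float],
    tap_radius: float = 10.0,
) -> str:
    """Classify a touch from where the finger landed and where it lifted."""
    dx = end[0] - start[0]
    dy = end[1] - start[1]

    # Very little movement counts as a tap.
    if math.hypot(dx, dy) <= tap_radius:
        return "tap"

    # Otherwise the dominant axis decides the swipe direction.
    if abs(dx) >= abs(dy):
        return "swipe_right" if dx > 0 else "swipe_left"
    return "swipe_down" if dy > 0 else "swipe_up"


tap = classify_touch((100, 100), (103, 98))
right = classify_touch((100, 100), (220, 110))
up = classify_touch((100, 300), (105, 120))
```

Each returned label would then be mapped to an action, such as opening an item on a tap or scrolling on a swipe.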

Voice User Interface

A voice interface allows users to interact with the system through voice commands and uses speech recognition to turn those commands into actions. They use natural language processing and artificial intelligence technologies to allow people to control the operating system using only their voice.

VUIs have become popular in recent years with the spread of smart speakers, voice assistants like Siri and Alexa, and voice-controlled devices like the Xbox. They’re particularly useful for tasks that can be expressed in short, concise voice commands, like answering questions.
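As a rough illustration of turning a spoken command into an action, the sketch below assumes speech recognition has already produced a text transcript and simply matches it against known phrases. The `COMMANDS` table, the action names, and `match_command` are hypothetical; production assistants rely on natural language processing rather than plain phrase matching.

```python
from typing import Optional

# Hypothetical phrases mapped to hypothetical action names.
COMMANDS = {
    "play music": "start_playback",
    "stop the music": "stop_playback",
    "what time is it": "tell_time",
}


def match_command(transcript: str) -> Optional[str]:
    """Return the action for the first known phrase found in the transcript."""
    text = transcript.lower().strip(" .!?")
    for phrase, action in COMMANDS.items():
        if phrase in text:
            return action
    return None  # nothing recognized; a real assistant would ask the user to repeat


action = match_command("Hey, play music please.")
unknown = match_command("Open the garage door")
```

Short, fixed phrases like these are easy to match reliably, which is one reason voice interfaces work best with concise commands.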

Natural Language User Interface

A natural language interface is a component of many of the previously mentioned systems, used wherever the system needs to understand and interpret users’ speech or respond to users in a natural way. These interfaces use AI and machine learning to process language as it’s naturally spoken.

Recent examples include AI chatbots like ChatGPT. They’re game changers for knowledge workers who need support with their thought processes. They can help you understand ideas, brainstorm solutions, or process and synthesize a large data set.

Terminal User Interface

A terminal interface, or command line interface, is a type of user interface that allows you to interact with a system by typing commands and receiving text-based output. Typically used by experienced users, terminal interfaces support systems administration and technical tasks in software design, development, security, and other related areas.

They provide a powerful way for developers in software engineering and systems administrators to give specific commands to help complete a wide range of tasks, including managing files and directories, running scripts and programs, and configuring operating system settings.
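A small command line tool shows the typed-command, text-output pattern in practice. This sketch uses Python’s standard `argparse` module; the `greet` program, its `name` argument, and its `--shout` flag are made up for illustration.

```python
import argparse


def build_parser() -> argparse.ArgumentParser:
    """Describe the commands and flags the tool understands."""
    parser = argparse.ArgumentParser(prog="greet", description="Print a greeting.")
    parser.add_argument("name", help="who to greet")
    parser.add_argument("--shout", action="store_true", help="use upper case")
    return parser


def run(argv: list[str]) -> str:
    """Parse the typed arguments and return the text the terminal would show."""
    args = build_parser().parse_args(argv)
    message = f"Hello, {args.name}"
    return message.upper() if args.shout else message


plain = run(["Ada"])
loud = run(["Ada", "--shout"])
```

In a real script the arguments would come from `sys.argv[1:]`, so typing `greet Ada --shout` at the prompt would print the upper-case greeting.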

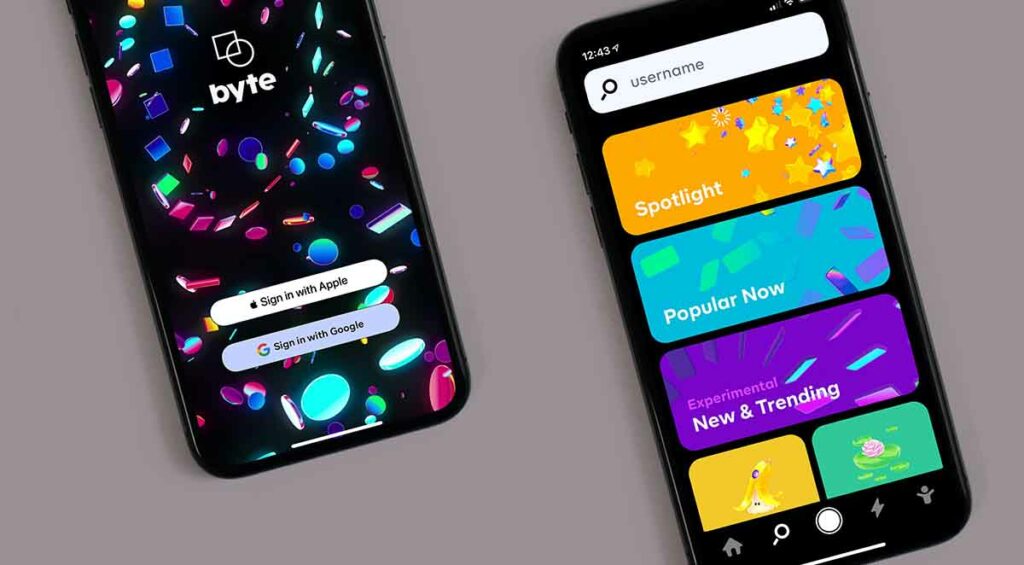

Graphical User Interface

The majority of the user interfaces mentioned here would be considered graphical user interfaces (GUIs). They allow users to interact with a computer using visual elements like icons, buttons, and windows presented on a screen. The touchscreen graphical user interface has become very popular since the rise of tablets, smartphones, and wearables.

This type of user interface design revolutionized computing technology and has become the primary way for us to interact with computers. As this technology advances into VR and AR, the graphical user interface is likely to become more advanced and innovative since the need for visuals will always be strong.

Menu-Driven User Interface

A menu-driven interface allows users to navigate a series of menus, often mirroring a file structure or directory, in order to locate features and functions within a graphical user interface. Menus can give a logical structure to complex directories and provide a relatively intuitive means of interaction.

MDUIs are used in cars to navigate the system’s settings and functionality, and in watches for similar tasks. Since these devices have small screens but lots of functionality, menus allow users to navigate them through progressive disclosure so they can continue to focus on their real-world tasks without losing their place in the device.
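Progressive disclosure is easy to picture as a nested structure in which only one level is shown at a time. The sketch below models a hypothetical in-car menu as nested dictionaries; the menu entries, the action names, and `options_at` are invented for this example.

```python
from typing import Union

# Hypothetical menu tree: dictionaries are submenus, strings are actions.
MENU = {
    "Settings": {
        "Display": {"Brightness": "open_brightness", "Night mode": "toggle_night_mode"},
        "Sound": {"Volume": "open_volume"},
    },
    "Navigation": {"Home": "route_home"},
}


def options_at(menu: dict, path: list[str]) -> Union[list[str], str]:
    """Follow the user's choices; return the next options or the final action."""
    node = menu
    for choice in path:
        node = node[choice]
    # A submenu shows only its own entries; a leaf is the action to run.
    return list(node) if isinstance(node, dict) else node


top_level = options_at(MENU, [])
settings = options_at(MENU, ["Settings"])
chosen = options_at(MENU, ["Settings", "Display", "Brightness"])
```

At every step the user sees a short list that fits a small screen, and the path of choices records their place in the device.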

Form-Based User Interface

A form-based user interface is a type of graphical user interface that presents the user with a series of forms or templates that capture user data step by step. A form-based UI uses text boxes, dropdown menus, and checkboxes to capture user input in a structured and logical way.

Form-based UIs are useful in applications requiring the user to input lots of data in a specific way, for example, a loan application, a visa application, or the configuration of an operating system or piece of software.
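The structured capture that forms provide can be sketched as a list of field definitions plus a validation pass. Everything named here (`Field`, `LOAN_FORM`, `validate`, and the individual field names) is hypothetical and exists only to illustrate the idea, not to describe any particular form library.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Field:
    name: str
    required: bool = True
    check: Optional[Callable[[str], bool]] = None  # optional format rule


# A hypothetical loan application form.
LOAN_FORM = [
    Field("full_name"),
    Field("amount", check=lambda value: value.isdigit() and int(value) > 0),
    Field("notes", required=False),
]


def validate(form: list[Field], answers: dict[str, str]) -> dict[str, str]:
    """Return one error message per problem field; an empty dict means valid."""
    errors: dict[str, str] = {}
    for field in form:
        value = answers.get(field.name, "").strip()
        if not value:
            if field.required:
                errors[field.name] = "required"
            continue
        if field.check is not None and not field.check(value):
            errors[field.name] = "invalid"
    return errors


ok = validate(LOAN_FORM, {"full_name": "Ada Lovelace", "amount": "500"})
problems = validate(LOAN_FORM, {"amount": "abc"})
```

Because every field declares what it expects, the interface can point the user to exactly which entries need attention before moving to the next step.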

The History of User Interfaces

1945: The batch interface was one of the earliest forms of user interface. A series of commands or tasks was run in batch mode without the user being able to interact directly with the computer. A program was submitted as a batch of commands, which the system then interpreted and ran from start to finish.

1969: The command line interface was introduced. This allowed users to interact with the computer using text-based commands. It was a significant improvement on the batch interface because it allowed users to perform tasks in real time.

1985: The text-based user interface was introduced. This improved the command line interface by providing a more graphical way of interacting with the computer. It used windows, icons, and menus to represent data and actions.

1968: The graphical user interface (GUI) was introduced, which marked a major turning point in the evolution of UI design. The GUI allowed users to interact with the computer using graphical elements and visual representations of data and actions rather than text-based commands. This made computing more accessible to a wider audience and laid the foundation for modern user interfaces.

Key Takeaways

- Intelligent User Interfaces use artificial intelligence and machine learning to create personalized experiences for users.

- Batch interfaces take a single command from the user and process a whole set of tasks without further user interaction.

- WIMP stands for ‘Windows, Icons, Menus, Pointer’ and refers to a graphical interface that uses these graphical elements to allow the user to interact with systems.

- Tangible User Interfaces use physical objects as the primary means of interacting with technology, while Kinetic User Interfaces rely on gestures or motion-based commands detected by cameras or depth sensors.

- Natural User Interfaces combine voice assistants and gesture-based interfaces in an invisible way, while Organic User Interfaces mimic living organisms or systems like cells and ecosystems in art installations or interactive media displays.

- Touchless user interfaces require no physical input devices such as screens, keyboards, or mice; voice user interfaces let users interact through voice commands; natural language user interfaces interpret language as it is naturally spoken; terminal and graphical interfaces are two popular types of UI in use today; menu-driven UIs guide users through a series of menus, while form-based UIs capture data step by step using text boxes, dropdown menus, and checkboxes.

- The fundamental principles of UI design that govern a good user interface haven’t changed much over the years, but the UX design of the technology is getting closer to seamless interaction.

- Since the rise of the world wide web, computer science and web design have demanded more flexible and intuitive systems for creating new technology and products for customers.